ChatGPT vs Eliza - round 2

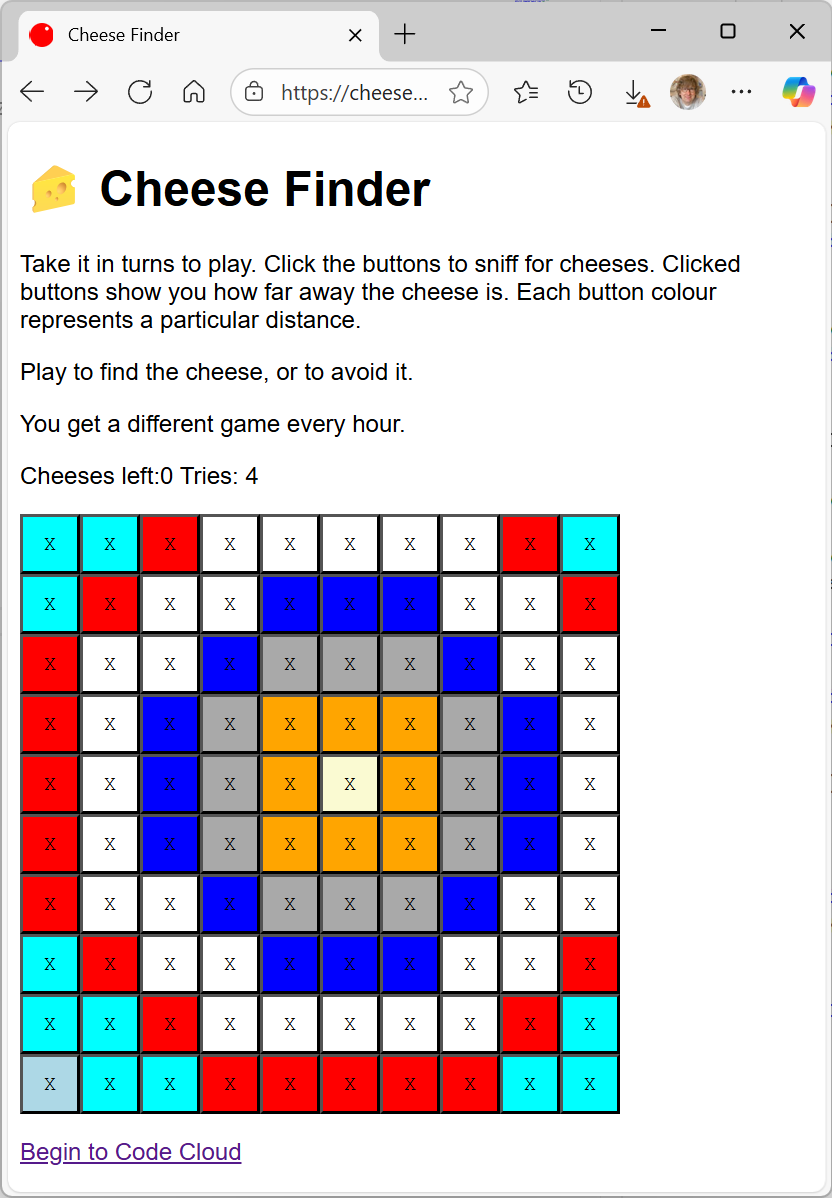

After yesterday's post I asked ChatGPT to compare ChatGPT and Eliza. It wrote this:

ChatGPT and Eliza are both AI-powered conversational systems, but they are quite different in terms of their capabilities and intended uses.

ChatGPT is a state-of-the-art language model developed by OpenAI that is capable of generating human-like text in response to input from a user. It can be used for a variety of purposes, including generating creative writing, responding to customer inquiries, or engaging in conversation with users.

Eliza, on the other hand, is a much simpler program that was developed in the 1960s. It uses a set of rules and patterns to generate responses to user input, and is intended primarily as a demonstration of how computers can simulate conversation. Unlike ChatGPT, Eliza is not capable of generating original text and is only able to produce responses based on the rules that it has been programmed with.

Overall, ChatGPT is a much more advanced and capable system than Eliza, but Eliza has its own unique place in the history of AI.

You could believe a person wrote this, just like you could believe Eliza was a psychiatrist. But if you look closer you see a well-put-together pastiche of a few facts gleaned from the web, plus one paragraph straight from the sales brochure. It’s cleverly done. But I still don’t think it’s clever.